pix2pix-zero

Maintainer: cjwbw


| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| GitHub Link | View on GitHub |
| Paper Link | No paper link provided |


Model overview

pix2pix-zero is a diffusion-based image-to-image model, maintained on Replicate by cjwbw, that performs zero-shot image translation. Unlike traditional image-to-image translation models that require fine-tuning for each task, pix2pix-zero uses a pre-trained Stable Diffusion model directly to edit real and synthetic images while preserving the input image's structure. The approach is training-free and prompt-free: it needs neither costly fine-tuning nor a manually written text prompt.

The model resembles other works such as pix2struct and daclip-uir in that it builds on pre-trained vision-language models for efficient image editing and manipulation. pix2pix-zero stands out by supporting a wide range of zero-shot edits without model fine-tuning or a hand-written text prompt; the user only specifies an editing direction.

Model inputs and outputs

pix2pix-zero takes an input image and a specified editing task (e.g., "cat to dog") and outputs the edited image. Beyond naming the task, the user writes no text prompt, and the model needs no task-specific fine-tuning, which makes it a versatile and efficient tool for image-to-image translation.

Inputs

- Image: The input image to be edited

- Task: The desired editing direction, such as "cat to dog" or "zebra to horse"

- Xa Guidance: A parameter that controls the amount of cross-attention guidance applied during the editing process

- Use Float 16: A flag to enable the use of half-precision (float16) computation for reduced VRAM requirements

- Num Inference Steps: The number of denoising steps to perform during the editing process

- Negative Guidance Scale: A parameter that controls the influence of the negative guidance during the editing process

Outputs

- Edited Image: The output image with the specified editing applied, while preserving the structure of the input image
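The inputs listed above could be assembled into a request payload along these lines. This is a minimal sketch, not the published schema: the snake_case key names (other than `xa_guidance`), the default values, the task string format, and the helper names `build_edit_input` and `run_edit` are all assumptions made for illustration, so check the API spec on Replicate before relying on them.

```python
from typing import Any, Dict


def build_edit_input(
    image: str,
    task: str,
    xa_guidance: float = 0.1,             # illustrative default, not from the spec
    use_float_16: bool = True,            # illustrative default, not from the spec
    num_inference_steps: int = 50,        # illustrative default, not from the spec
    negative_guidance_scale: float = 5.0, # illustrative default, not from the spec
) -> Dict[str, Any]:
    """Assemble a payload mirroring the inputs listed above.

    Key names are assumed from the display names; the real schema may differ.
    """
    if not task.strip():
        raise ValueError("task must name an editing direction, e.g. 'cat to dog'")
    if xa_guidance < 0:
        raise ValueError("xa_guidance must be non-negative")
    if num_inference_steps < 1:
        raise ValueError("num_inference_steps must be at least 1")
    return {
        "image": image,
        "task": task,
        "xa_guidance": xa_guidance,
        "use_float_16": use_float_16,
        "num_inference_steps": num_inference_steps,
        "negative_guidance_scale": negative_guidance_scale,
    }


def run_edit(model_ref: str, payload: Dict[str, Any]) -> Any:
    """Send the payload to Replicate.

    Requires the third-party `replicate` package and a REPLICATE_API_TOKEN
    environment variable. `model_ref` is whatever model reference the
    Replicate page gives you; none is hard-coded here.
    """
    import replicate  # imported lazily so build_edit_input works offline

    return replicate.run(model_ref, input=payload)
```

Keeping payload construction separate from the network call makes it possible to validate parameters locally before spending any inference time.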

Capabilities

pix2pix-zero performs zero-shot image-to-image translation on both real and synthetic images, without manual text prompting or costly fine-tuning. The model can translate between visual concepts such as "cat to dog", "zebra to horse", and "tree to fall" while maintaining the overall structure and composition of the input image.

What can I use it for?

The pix2pix-zero model can be a powerful tool for a variety of image editing and manipulation tasks. Some potential use cases include:

- Creative photo editing: Quickly apply creative edits to existing photos, such as transforming a cat into a dog or a zebra into a horse, without the need for manual editing.

- Data augmentation: Generate diverse synthetic datasets for machine learning tasks by applying various zero-shot transformations to existing images.

- Personalization: Adapt existing images to a viewer's preferences with zero-shot edits, such as turning images of cats into dogs for users who prefer canines.

- Prototyping and ideation: Rapidly explore different design concepts or product ideas by applying zero-shot edits to existing images or synthetic assets.

Things to try

One interesting aspect of pix2pix-zero is its ability to preserve the structure and composition of the input image while applying the desired edit. This can be particularly useful when working with real-world photographs, where maintaining the overall integrity of the image is crucial.

You can experiment with adjusting the xa_guidance parameter to find the right balance between preserving the input structure and achieving the desired editing outcome. Increasing the xa_guidance value can help maintain more of the input image's structure, while decreasing it can result in more dramatic transformations.
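One way to run that experiment is to prepare one payload per candidate value and compare the outputs side by side. The sketch below only builds the payloads; the helper name `xa_guidance_sweep`, the example values, and the `image` and `task` keys are assumptions for illustration, and submitting each payload to the model is left to whichever client you use.

```python
from typing import Any, Dict, Iterable, List


def xa_guidance_sweep(
    base_input: Dict[str, Any], values: Iterable[float]
) -> List[Dict[str, Any]]:
    """Return one payload per xa_guidance value, ordered from low to high.

    Lower values allow more dramatic edits; higher values keep more of the
    input structure. The base payload is copied, never mutated.
    """
    payloads = []
    for value in sorted(set(values)):
        if value < 0:
            raise ValueError("xa_guidance must be non-negative")
        payload = dict(base_input)
        payload["xa_guidance"] = value
        payloads.append(payload)
    return payloads


# Hypothetical base payload; the key names are assumptions.
base = {"image": "https://example.com/cat.png", "task": "cat to dog"}
sweep = xa_guidance_sweep(base, [0.15, 0.05, 0.1])
print([p["xa_guidance"] for p in sweep])
```

Holding every other input fixed across the sweep isolates the effect of the guidance strength on the result.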

Additionally, the model's versatility allows you to explore a wide range of editing directions beyond the examples provided. Try experimenting with different combinations of source and target concepts, such as "tree to flower" or "car to boat", to see the model's capabilities in action.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models


text2video-zero


The text2video-zero model, developed by cjwbw from Picsart AI Research, leverages the power of existing text-to-image synthesis methods, like Stable Diffusion, to enable zero-shot video generation. This means the model can generate videos directly from text prompts without any additional training or fine-tuning. The model is capable of producing temporally consistent videos that closely follow the provided textual guidance.

The text2video-zero model is related to other text-guided diffusion models like Clip-Guided Diffusion and TextDiffuser, which explore various techniques for using diffusion models as text-to-image and text-to-video generators.

Model inputs and outputs

Inputs

- Prompt: The textual description of the desired video content.

- Model Name: The Stable Diffusion model to use as the base for video generation.

- Timestep T0 and T1: The range of DDPM steps to perform, controlling the level of variance between frames.

- Motion Field Strength X and Y: Parameters that control the amount of motion applied to the generated frames.

- Video Length: The desired duration of the output video.

- Seed: An optional random seed to ensure reproducibility.

Outputs

- Video: The generated video file based on the provided prompt and parameters.

Capabilities

The text2video-zero model can generate a wide variety of videos from text prompts, including scenes with animals, people, and fantastical elements. For example, it can produce videos of "a horse galloping on a street", "a panda surfing on a wakeboard", or "an astronaut dancing in outer space". The model is able to capture the movement and dynamics of the described scenes, resulting in temporally consistent and visually compelling videos.

What can I use it for?

The text2video-zero model can be useful for a variety of applications, such as:

- Generating video content for social media, marketing, or entertainment purposes.

- Prototyping and visualizing ideas or concepts that can be described in text form.

- Experimenting with creative video generation and exploring the boundaries of what is possible with AI-powered video synthesis.

Things to try

One interesting aspect of the text2video-zero model is its ability to incorporate additional guidance, such as poses or edges, to further influence the generated video. By providing a reference video or image with canny edges, the model can generate videos that closely follow the visual structure of the guidance, while still adhering to the textual prompt.

Another intriguing feature is the model's support for Dreambooth specialization, which allows you to fine-tune the model on a specific visual style or character. This can be used to generate videos that have a distinct artistic or stylistic flair, such as "an astronaut dancing in the style of Van Gogh's Starry Night".

Updated Invalid Date

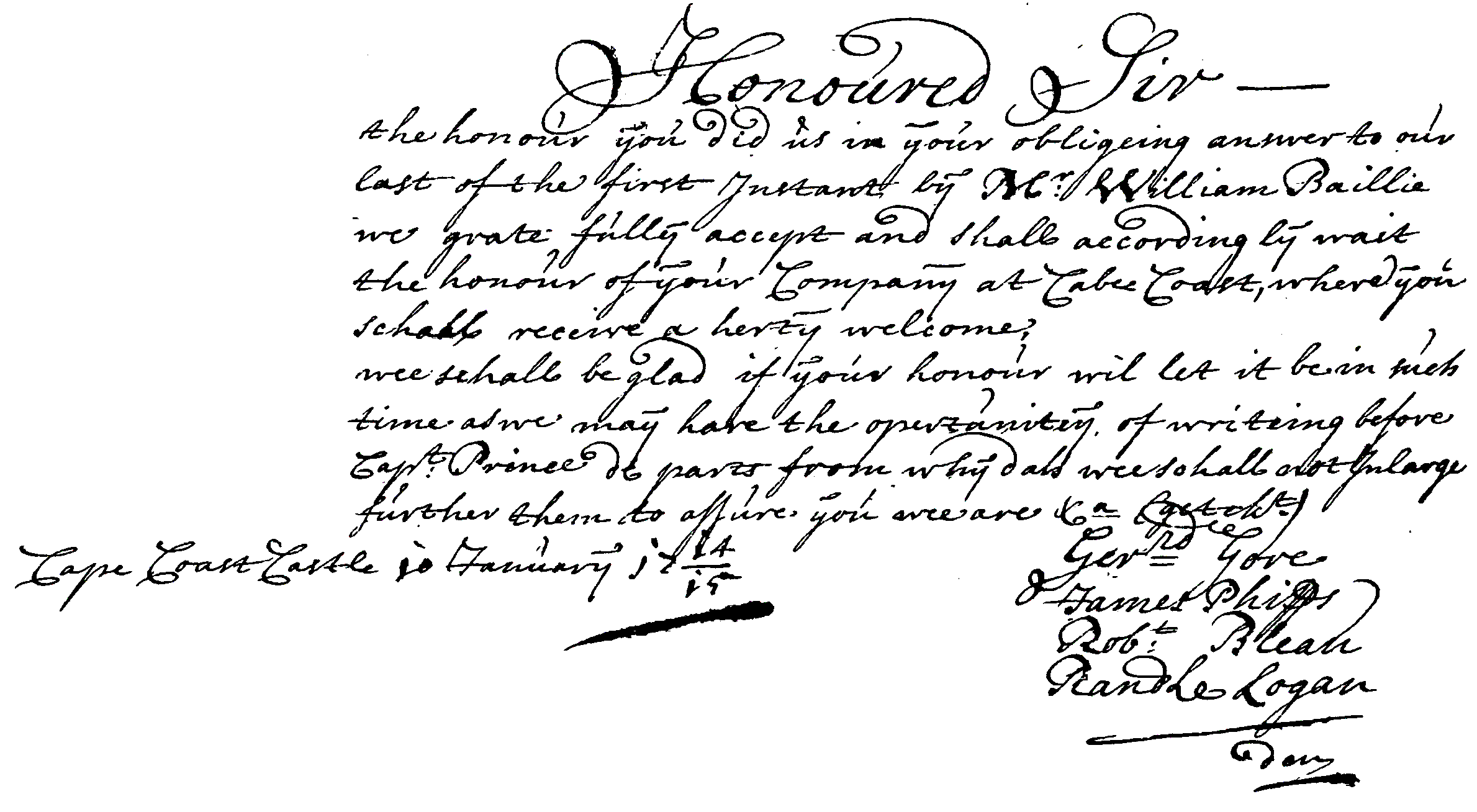

docentr


The docentr model is an end-to-end document image enhancement transformer developed by cjwbw. It is a PyTorch implementation of the paper "DocEnTr: An End-to-End Document Image Enhancement Transformer" and is built on top of the vit-pytorch vision transformers library. The model is designed to enhance and binarize degraded document images, as demonstrated in the provided examples.

Model inputs and outputs

The docentr model takes an image as input and produces an enhanced, binarized output image. The input image can be a degraded or low-quality document, and the model aims to improve its visual quality by performing tasks such as binarization, noise removal, and contrast enhancement.

Inputs

- image: The input image, which should be in a valid image format (e.g., PNG, JPEG).

Outputs

- Output: The enhanced, binarized output image.

Capabilities

The docentr model is capable of performing end-to-end document image enhancement, including binarization, noise removal, and contrast improvement. It can be used to improve the visual quality of degraded or low-quality document images, making them more readable and easier to process. The model has shown promising results on benchmark datasets such as DIBCO, H-DIBCO, and PALM.

What can I use it for?

The docentr model can be useful for a variety of applications that involve processing and analyzing document images, such as optical character recognition (OCR), document archiving, and image-based document retrieval. By enhancing the quality of the input images, the model can help improve the accuracy and reliability of downstream tasks. Additionally, the model's capabilities can be leveraged in projects related to document digitization, historical document restoration, and automated document processing workflows.

Things to try

You can experiment with the docentr model by testing it on your own degraded document images and observing the binarization and enhancement results. The model is also available as a pre-trained Replicate model, which you can use to quickly apply the image enhancement without training the model yourself. Additionally, you can explore the provided demo notebook to gain a better understanding of how to use the model and customize its configurations.


rembg


rembg is an AI model developed by cjwbw that can remove the background from images. It is similar to other background removal models like rmgb, rembg, background_remover, and remove_bg, all of which aim to separate the subject from the background in an image.

Model inputs and outputs

The rembg model takes an image as input and outputs a new image with the background removed. This can be a useful preprocessing step for various computer vision tasks, like object detection or image segmentation.

Inputs

- Image: The input image to have its background removed.

Outputs

- Output: The image with the background removed.

Capabilities

The rembg model can effectively remove the background from a wide variety of images, including portraits, product shots, and nature scenes. It is trained to work well on complex backgrounds and can handle partial occlusions or overlapping objects.

What can I use it for?

You can use rembg to prepare images for further processing, such as creating cut-outs for design work, enhancing product photography, or improving the performance of other computer vision models. For example, you could use it to extract the subject of an image and overlay it on a new background, or to remove distracting elements from an image before running an object detection algorithm.

Things to try

One interesting thing to try with rembg is using it on images with multiple subjects or complex backgrounds. See how it handles separating individual elements and preserving fine details. You can also experiment with using the model's output as input to other computer vision tasks, like image segmentation or object tracking, to see how it impacts the performance of those models.


pix2struct


pix2struct is a powerful image-to-text model developed by researchers at Google. It uses a novel pretraining strategy, learning to parse masked screenshots of web pages into simplified HTML. This approach allows the model to learn a general understanding of visually-situated language, which can then be fine-tuned on a variety of downstream tasks.

The model is related to other visual language models developed by the same team, such as pix2struct-base and cogvlm. These models share similar architectures and pretraining objectives, aiming to create versatile foundations for understanding the interplay between images and text.

Model inputs and outputs

Inputs

- Text: Input text for the model to process

- Image: Input image for the model to analyze

- Model name: The specific pix2struct model to use, e.g. screen2words

Outputs

- Output: The model's generated response, which could be a caption, a structured representation, or an answer to a question, depending on the specific task.

Capabilities

pix2struct is a highly capable model that can be applied to a wide range of visual language understanding tasks. It has demonstrated state-of-the-art performance on a variety of benchmarks, including documents, illustrations, user interfaces, and natural images. The model's ability to learn from web-based data makes it well-suited for handling the diversity of visually-situated language found in the real world.

What can I use it for?

pix2struct can be used for a variety of applications that involve understanding the relationship between images and text, such as:

- Image Captioning: Generating descriptive captions for images

- Visual Question Answering: Answering questions about the content of an image

- Document Understanding: Extracting structured information from document images

- User Interface Analysis: Parsing and understanding the layout and functionality of user interface screenshots

Given its broad capabilities, pix2struct could be a valuable tool for developers, researchers, and businesses working on projects that require visually-grounded language understanding.

Things to try

One interesting aspect of pix2struct is its flexible integration of language and vision inputs. The model can accept language prompts, such as questions, that are rendered directly on top of the input image. This allows for more nuanced and interactive task formulations, where the model can reason about the image in the context of a specific query or instruction.

Developers and researchers could explore this feature to create novel applications that blend image analysis and language understanding in creative ways, for example by building interactive visual assistants that can answer questions about the contents of an image or provide guidance based on a user's instructions.
