clip-guided-diffusion

Maintainer: cjwbw


| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| GitHub Link | View on GitHub |
| Paper Link | No paper link provided |


Model overview

clip-guided-diffusion is a Cog implementation of CLIP Guided Diffusion, originally developed by Katherine Crowson. It uses CLIP (Contrastive Language-Image Pre-training), a model that scores how well an image matches a piece of text, to guide the image generation process so that outputs stay semantically close to the prompt. This differs from Stable Diffusion, which is trained to condition on the text prompt directly: here CLIP steers the diffusion sampler toward the prompt during generation.
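To make the idea of guidance concrete, the following is a toy sketch rather than the model's actual code. It assumes plain Python lists stand in for CLIP embeddings (so the "image encoder" is the identity function), and it omits the diffusion model's denoising update entirely. The function names and the `guidance_scale` parameter are hypothetical. What it shows is the core mechanism: at each step, the image is nudged along the gradient of its similarity to the text.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similarity_gradient(image, text):
    """Gradient of cosine_similarity(image, text) with respect to image."""
    norm_i = math.sqrt(sum(x * x for x in image))
    norm_t = math.sqrt(sum(t * t for t in text))
    cos = cosine_similarity(image, text)
    # d/dx [x.t / (|x||t|)] = (t/|t| - cos * x/|x|) / |x|
    return [(t / norm_t - cos * x / norm_i) / norm_i for x, t in zip(image, text)]


def guided_sampling(image, text, timesteps=10, guidance_scale=0.5):
    """Run `timesteps` guidance-only updates and record the similarity after each.

    Assumption: a real sampler would also apply the diffusion model's
    denoising update at every step; that part is left out here.
    """
    history = [cosine_similarity(image, text)]
    for _ in range(timesteps):
        grad = similarity_gradient(image, text)
        image = [x + guidance_scale * g for x, g in zip(image, grad)]
        history.append(cosine_similarity(image, text))
    return image, history


if __name__ == "__main__":
    # Start with an "image" orthogonal to the "text" and watch the score rise.
    _, scores = guided_sampling([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])
    print([round(s, 3) for s in scores])
```

In the real model the similarity comes from CLIP's image and text encoders and the gradient is combined with the sampler's own update, but the direction of the nudge is chosen in the same spirit.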

Model inputs and outputs

The clip-guided-diffusion model takes a text prompt as input and generates a set of images as output. The prompt can be anything from a simple description to a complex, imaginative scenario. The model uses CLIP to guide the diffusion process so that the resulting images match the semantic content of the prompt.

Inputs

- Prompt: The text prompt that describes the desired image.

- Timesteps: The number of diffusion steps to use during the image generation process.

- Display Frequency: The frequency at which the intermediate image outputs should be displayed.

Outputs

- Array of Image URLs: The generated images, each represented as a URL.
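As a minimal sketch of how these inputs might be packaged for Replicate's HTTP predictions endpoint, the snippet below builds a request without sending it. The snake_case key names (`prompt`, `timesteps`, `display_frequency`), the default values, and the helper functions are assumptions for illustration, and `MODEL_VERSION` is a placeholder: check the API spec on Replicate for the real schema and version ID.

```python
import json
import os
import urllib.request

API_URL = "https://api.replicate.com/v1/predictions"
# Placeholder: copy the real version ID from the model's API page on Replicate.
MODEL_VERSION = "<version-id-from-replicate>"


def build_input(prompt, timesteps=1000, display_frequency=100):
    """Validate the three documented inputs and return them as a dict.

    Key names and default values are assumptions, not the published schema.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if timesteps < 1:
        raise ValueError("timesteps must be a positive integer")
    if display_frequency < 1:
        raise ValueError("display_frequency must be a positive integer")
    return {
        "prompt": prompt,
        "timesteps": timesteps,
        "display_frequency": display_frequency,
    }


def build_request(model_input, token):
    """Build (but do not send) a POST request that would create a prediction."""
    body = json.dumps({"version": MODEL_VERSION, "input": model_input})
    return urllib.request.Request(
        API_URL,
        data=body.encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    token = os.environ.get("REPLICATE_API_TOKEN", "")
    request = build_request(
        build_input("a watercolor painting of a lighthouse at dawn"), token
    )
    # Nothing is sent here; pass `request` to urllib.request.urlopen to submit it.
    print(request.get_method(), request.full_url)
```

Submitting the request returns a prediction object. Once the prediction has finished, its output should contain the array of image URLs described above.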

Capabilities

The clip-guided-diffusion model can generate a wide range of images from text prompts, from realistic scenes to more abstract and imaginative compositions. Because CLIP guides the diffusion process toward the prompt, the outputs are intended to track the semantic content of the text closely.

What can I use it for?

The clip-guided-diffusion model can be used for a variety of applications, including:

- Content Generation: Create unique, custom images to use in marketing materials, social media posts, or other visual content.

- Prototyping and Visualization: Quickly generate visual concepts and ideas based on textual descriptions, which can be useful in fields like design, product development, and architecture.

- Creative Exploration: Experiment with different text prompts to generate unexpected and imaginative images, opening up new creative possibilities.

Things to try

One interesting aspect of the clip-guided-diffusion model is its ability to generate images that capture the nuanced semantics of the input prompt. Try experimenting with prompts that contain specific details or evocative language, and observe how the model translates these textual descriptions into visually compelling outputs. Additionally, you can explore the model's capabilities by comparing its results to those of other diffusion-based models, such as Stable Diffusion or DiffusionCLIP, to understand the unique strengths and characteristics of the clip-guided-diffusion approach.

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

stable-diffusion-2-1-unclip


The stable-diffusion-2-1-unclip model, created by cjwbw, is a text-to-image diffusion model that can generate photo-realistic images from text prompts. This model builds upon the foundational Stable Diffusion model, incorporating enhancements and new capabilities. Compared to similar models like Stable Diffusion Videos and Stable Diffusion Inpainting, the stable-diffusion-2-1-unclip model offers unique features and capabilities tailored to specific use cases.

Model inputs and outputs

The stable-diffusion-2-1-unclip model takes a variety of inputs, including an input image, a seed value, a scheduler, the number of outputs, the guidance scale, and the number of inference steps. These inputs allow users to fine-tune the image generation process and achieve their desired results.

Inputs

- Image: The input image that the model will use as a starting point for generating new images.

- Seed: A random seed value that can be used to ensure reproducible image generation.

- Scheduler: The scheduling algorithm used to control the diffusion process.

- Num Outputs: The number of images to generate.

- Guidance Scale: The scale for classifier-free guidance, which controls the balance between the input text prompt and the model's own learned distribution.

- Num Inference Steps: The number of denoising steps to perform during the image generation process.

Outputs

- Output Images: The generated images, represented as a list of image URLs.

Capabilities

The stable-diffusion-2-1-unclip model is capable of generating a wide range of photo-realistic images from text prompts. It can create images of diverse subjects, including landscapes, portraits, and abstract scenes, with a high level of detail and realism. The model also demonstrates improved performance in areas like image inpainting and video generation compared to earlier versions of Stable Diffusion.

What can I use it for?

The stable-diffusion-2-1-unclip model can be used for a variety of applications, such as digital art creation, product visualization, and content generation for social media and marketing. Its ability to generate high-quality images from text prompts makes it a powerful tool for creative professionals, hobbyists, and businesses looking to streamline their visual content creation workflows. With its versatility and continued development, the stable-diffusion-2-1-unclip model represents an exciting advancement in the field of text-to-image AI.

Things to try

One interesting aspect of the stable-diffusion-2-1-unclip model is its ability to generate images with a unique and distinctive style. By experimenting with different input prompts and model parameters, users can explore the model's range and create images that evoke specific moods, emotions, or artistic sensibilities. Additionally, the model's strong performance in areas like image inpainting and video generation opens up new creative possibilities for users to explore.


diffusionclip


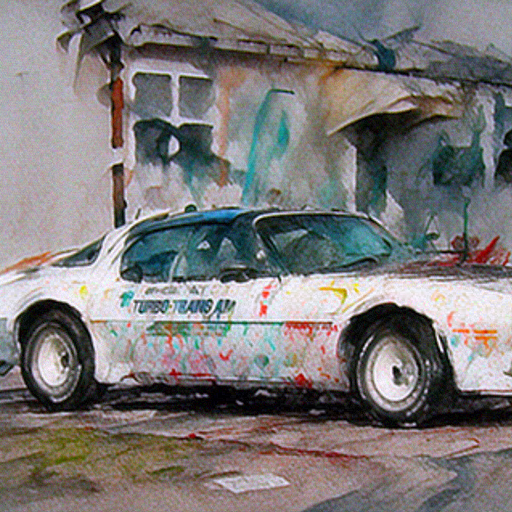

DiffusionCLIP is a novel method that performs text-driven image manipulation using diffusion models. It was proposed by Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye in their CVPR 2022 paper. Unlike prior GAN-based approaches, DiffusionCLIP leverages the full inversion capability and high-quality image generation power of recent diffusion models to enable zero-shot image manipulation, even between unseen domains. This allows for robust and faithful manipulation of real images, going beyond the limited capabilities of GAN inversion methods. DiffusionCLIP is similar in spirit to other text-guided image manipulation models like StyleCLIP and StyleGAN-NADA, but with key technical differences enabled by its diffusion-based approach.

Model inputs and outputs

Inputs

- Image: An input image to be manipulated.

- Edit type: The desired attribute or style to apply to the input image (e.g. "ImageNet style transfer - Watercolor art").

- Manipulation: The type of manipulation to perform (e.g. "ImageNet style transfer").

- Degree of change: The intensity or amount of the desired edit, from 0 (no change) to 1 (maximum change).

- N test step: The number of steps to use in the image generation process, between 5 and 100.

Outputs

- Output image: The manipulated image, with the desired attribute or style applied.

Capabilities

DiffusionCLIP enables high-quality, zero-shot image manipulation even on real-world images from diverse datasets like ImageNet. It can accurately edit images while preserving the original identity and content, unlike prior GAN-based approaches. The model also supports multi-attribute manipulation by blending noise from multiple fine-tuned models. Additionally, DiffusionCLIP can translate images between unseen domains, generating new images from scratch based on text prompts.

What can I use it for?

DiffusionCLIP can be a powerful tool for a variety of image editing and generation tasks. Its ability to manipulate real-world images in diverse domains makes it suitable for applications like photo editing, digital art creation, and even product visualization. Businesses could leverage DiffusionCLIP to quickly generate product mockups or visualizations based on textual descriptions. Creators could use it to explore creative possibilities by manipulating images in unexpected ways guided by text prompts.

Things to try

One interesting aspect of DiffusionCLIP is its ability to translate images between unseen domains, such as generating a "watercolor art" version of an input image. Try experimenting with different text prompts to see how the model can transform images in surprising ways, going beyond simple attribute edits. You could also explore the model's multi-attribute manipulation capabilities, blending different text-guided changes to create unique hybrid outputs.


clip-guided-diffusion-pokemon


clip-guided-diffusion-pokemon is a Cog implementation of a diffusion model trained on Pokémon sprites, allowing users to generate unique pixel art Pokémon from text prompts. This model builds upon the work of the CLIP-Guided Diffusion model, which uses CLIP to guide the diffusion process for image generation. By focusing the model on Pokémon sprites, the clip-guided-diffusion-pokemon model is able to produce highly detailed and accurate Pokémon-inspired pixel art.

Model inputs and outputs

The clip-guided-diffusion-pokemon model takes a single input - a text prompt describing the desired Pokémon. The model then generates a set of images that match the prompt, returning the images as a list of file URLs and accompanying text descriptions.

Inputs

- prompt: A text prompt describing the Pokémon you want to generate, e.g. "a pokemon resembling ♲ #pixelart"

Outputs

- file: A URL pointing to the generated Pokémon sprite image

- text: A text description of the generated Pokémon image

Capabilities

The clip-guided-diffusion-pokemon model is capable of generating a wide variety of Pokémon-inspired pixel art images from text prompts. The model is able to capture the distinctive visual style of Pokémon sprites, while also incorporating elements specified in the prompt such as unique color palettes or anatomical features.

What can I use it for?

With the clip-guided-diffusion-pokemon model, you can create custom Pokémon for use in games, fan art, or other creative projects. The model's ability to generate unique Pokémon sprites from text prompts makes it a powerful tool for Pokémon enthusiasts, game developers, and digital artists. You could potentially monetize the model by offering custom Pokémon sprite generation as a service to clients.

Things to try

One interesting aspect of the clip-guided-diffusion-pokemon model is its ability to generate Pokémon with unique or unconventional designs. Try experimenting with prompts that combine Pokémon features in unexpected ways, or that introduce fantastical or surreal elements. You could also try using the model to generate Pokémon sprites for entirely new regions or evolutionary lines, expanding the Pokémon universe in creative ways.


textdiffuser


textdiffuser is a diffusion model created by Replicate contributor cjwbw. It is similar to other powerful text-to-image models like stable-diffusion, latent-diffusion-text2img, and stable-diffusion-v2. These models use diffusion techniques to transform text prompts into detailed, photorealistic images.

Model inputs and outputs

The textdiffuser model takes a text prompt as input and generates one or more corresponding images. The key input parameters are:

Inputs

- Prompt: The text prompt describing the desired image

- Seed: A random seed value to control the image generation

- Guidance Scale: A parameter that controls the influence of the text prompt on the generated image

- Num Inference Steps: The number of denoising steps to perform during image generation

Outputs

- Output Images: One or more generated images corresponding to the input text prompt

Capabilities

textdiffuser can generate a wide variety of photorealistic images from text prompts, ranging from scenes and objects to abstract art and stylized depictions. The quality and fidelity of the generated images are highly impressive, often rivaling or exceeding human-created artwork.

What can I use it for?

textdiffuser and similar diffusion models have a wealth of potential applications, from creative tasks like art and illustration to product visualization, scene generation for games and films, and much more. Businesses could use these models to rapidly prototype product designs, create promotional materials, or generate custom images for marketing campaigns. Creatives could leverage them to ideate and explore new artistic concepts, or to bring their visions to life in novel ways.

Things to try

One interesting aspect of textdiffuser and related models is their ability to capture and reproduce specific artistic styles, as demonstrated by the van-gogh-diffusion model. Experimenting with different styles, genres, and creative prompts can yield fascinating and unexpected results. Additionally, the clip-guided-diffusion model offers a unique approach to image generation that could be worth exploring further.
