Salesforce

Models by this creator

blip

80.4K

BLIP (Bootstrapping Language-Image Pre-training) is a vision-language model developed by Salesforce for tasks such as image captioning, visual question answering, and image-text retrieval. It is pre-trained on a large dataset of image-text pairs and can be fine-tuned for specific tasks. Compared with task-specific checkpoints such as blip-vqa-base, blip-image-captioning-large, and blip-image-captioning-base, this model is more general-purpose and covers a wider range of vision-language tasks.

Model inputs and outputs

BLIP takes an image and, depending on the task, a caption or a question, and generates a text response. It supports both conditional and unconditional image captioning, as well as open-ended visual question answering.

Inputs

- **Image**: An image to be processed
- **Caption**: A caption for the image (for image-text matching tasks)
- **Question**: A question about the image (for visual question answering tasks)

Outputs

- **Caption**: A generated caption for the input image
- **Answer**: An answer to the input question about the image

Capabilities

BLIP generates captions for images and answers questions about their visual content. Its authors report state-of-the-art results, at the time of publication, on image-text retrieval, image captioning, and visual question answering.

What can I use it for?

You can use BLIP in applications that need to process visual and textual information together, such as:

- **Image captioning**: Generate descriptive captions for images, which can be useful for accessibility, image search, and content moderation.
- **Visual question answering**: Answer questions about the content of images, which can be useful for building interactive interfaces and automating customer support.
- **Image-text retrieval**: Find relevant images based on textual queries, or find relevant text based on visual input, which can be useful for building image search engines and content recommendation systems.

Things to try

BLIP can perform zero-shot video-text retrieval: it transfers what it has learned about vision-language relationships to the video domain without any additional training. This suggests that its representations of visual and textual information generalize across tasks and modalities.

BLIP's pre-training also uses a "bootstrap" approach: the model first generates synthetic captions for web-scraped images and then filters out the noisy captions. This lets it make effective use of large-scale web data, a common source of supervision for vision-language models, while reducing the impact of noisy or irrelevant image-text pairs.
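The caption-then-filter idea behind the bootstrap approach can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not BLIP's actual training code: `bootstrap_captions`, `toy_captioner`, `toy_match_score`, the file names, and the 0.5 threshold are all hypothetical, and the two toy functions stand in for a real captioning model and a real image-text matching model.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str]  # (image identifier, text)


def bootstrap_captions(
    web_pairs: Iterable[Pair],
    captioner: Callable[[str], str],
    match_score: Callable[[str, str], float],
    threshold: float = 0.5,  # assumed cut-off, chosen only for this sketch
) -> List[Pair]:
    """Generate a synthetic caption per image, then keep only texts
    (web or synthetic) that the matcher scores at or above `threshold`."""
    cleaned: List[Pair] = []
    for image, web_text in web_pairs:
        synthetic = captioner(image)
        for text in (web_text, synthetic):
            if match_score(image, text) >= threshold:
                cleaned.append((image, text))
    return cleaned


# Toy stand-ins so the sketch runs without any model (purely illustrative).
TOY_CONTENT = {
    "img_dog.jpg": "a dog on grass",
    "img_cat.jpg": "a cat on a sofa",
}


def toy_captioner(image: str) -> str:
    # Pretend the captioner describes the image perfectly.
    return TOY_CONTENT[image]


def toy_match_score(image: str, text: str) -> float:
    # Fraction of the reference description's words found in the text.
    truth = set(TOY_CONTENT[image].split())
    return len(truth & set(text.split())) / len(truth)


web_pairs = [
    ("img_dog.jpg", "buy cheap shoes online"),    # noisy web text
    ("img_cat.jpg", "a cat sleeping on a sofa"),  # usable web text
]

cleaned = bootstrap_captions(web_pairs, toy_captioner, toy_match_score)
for image, text in cleaned:
    print(image, "->", text)
```

In this toy run the unrelated web text for the dog image is dropped while its synthetic caption is kept, which is the effect the bootstrap step is meant to have on noisy web data.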

Updated 5/10/2024

albef

3

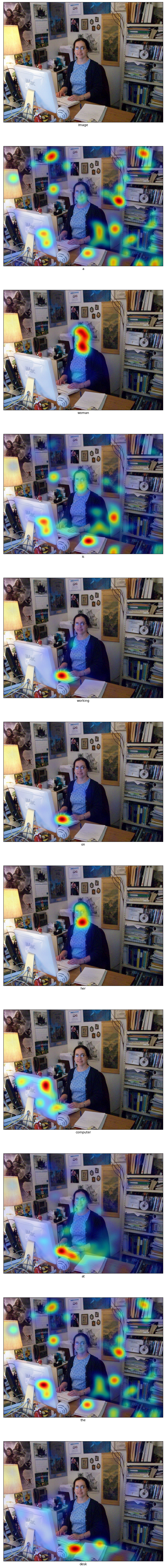

The albef model, developed by the Salesforce research team, is a vision-language representation learning model that aligns visual and textual features before fusing them (ALBEF stands for "align before fuse") and uses momentum distillation to learn from noisy image-text data. This sets it apart from models like BLIP and Stable Diffusion, which integrate visual and textual information in different ways.

Model inputs and outputs

The albef model takes two primary inputs: an image and a caption. The image is provided as a URI, and the caption is a string of text describing the image. The model outputs a Grad-CAM visualization that highlights the areas of the image corresponding to each word in the caption.

Inputs

- **Image**: The input image, provided as a URI.
- **Caption**: The caption describing the image, provided as a string of text.

Outputs

- **Grad-CAM Visualization**: A visual output highlighting the important regions of the image for each word in the caption.

Capabilities

The albef model is designed to explore the alignment between visual and textual representations, a fundamental challenge in vision-language understanding. With momentum distillation, the model can capture the semantic connections between images and their descriptions more effectively than some previous approaches.

What can I use it for?

The albef model and its associated techniques could be useful for applications that require understanding the relationship between visual and textual data, such as image captioning, visual question answering, and visual grounding. The Grad-CAM visualizations could also be used to gain insight into the model's decision-making process and improve the interpretability of vision-language systems.

Things to try

The albef model can perform well on a variety of vision-language tasks without extensive fine-tuning. This suggests that it has learned robust and transferable representations that could be reused across applications. Researchers and developers might explore how the model's capabilities could be extended or combined with other techniques to build more versatile vision-language systems.
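To make the Grad-CAM output more concrete, here is a minimal sketch of the standard Grad-CAM computation in plain Python. It is an assumption-laden illustration rather than the procedure the hosted albef model runs: the function names `grad_cam` and `normalize` are hypothetical, and the activation and gradient maps are made-up 2x2 grids, whereas a real system would obtain them from the network for each caption word.

```python
from typing import List

Grid = List[List[float]]  # one H x W map


def grad_cam(activations: List[Grid], gradients: List[Grid]) -> Grid:
    """Standard Grad-CAM: ReLU(sum_k alpha_k * A_k), where alpha_k is the
    spatial mean of the gradient of the score w.r.t. activation map A_k."""
    height, width = len(activations[0]), len(activations[0][0])
    heatmap = [[0.0] * width for _ in range(height)]
    for act, grad in zip(activations, gradients):
        alpha = sum(sum(row) for row in grad) / (height * width)
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += alpha * act[i][j]
    # ReLU keeps only regions that increase the score.
    return [[max(0.0, v) for v in row] for row in heatmap]


def normalize(heatmap: Grid) -> Grid:
    """Scale the heatmap so its largest value is 1 (unchanged if all zero)."""
    peak = max(max(row) for row in heatmap)
    if peak == 0.0:
        return heatmap
    return [[v / peak for v in row] for row in heatmap]


# Made-up maps for a single caption word (illustrative values only).
activations = [
    [[4.0, 2.0], [0.0, 0.0]],  # channel 1 responds to the top row
    [[0.0, 0.0], [0.0, 3.0]],  # channel 2 responds to the bottom-right cell
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel 1 supports the word
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 2 counts against it
]

cam = normalize(grad_cam(activations, gradients))
for row in cam:
    print(row)
```

Running one such computation per caption word and overlaying each normalized heatmap on the image gives a per-word visualization of the kind described above.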

Updated 5/10/2024